Fast Changes in Digital Content

As the digital world grows, the kinds of content covered by Not Safe for Work (NSFW) guidelines and warnings change just as quickly. An AI system used for content moderation therefore faces an uphill challenge: as it is rolled out and evolves, it must be able to learn new types of inappropriate material quickly and handle them appropriately.

Improving AI with Sophisticated Learning Algorithms

The adaptability of AI relies heavily on machine learning (ML) algorithms, especially deep learning, which lets systems learn continuously from a growing amount of data. For instance, content moderation models may be trained on datasets of millions of labeled images and videos that are regularly updated with new NSFW content. Training on these updated datasets is how the AI learns to recognize novel patterns over time and adjust to new types of content. Today, AI systems can already achieve 90-95% accuracy in detecting known types of explicit content.
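
To make the idea of learning from a regularly updated dataset concrete, here is a minimal sketch of incremental (online) training. It assumes a toy linear classifier over hypothetical two-dimensional feature vectors rather than the deep networks described above; the class `OnlineLogisticClassifier`, its `partial_fit` method, and the data are illustrative only. The model keeps its learned weights and continues training when the dataset is extended with a newly labeled content type, and `accuracy` computes the kind of metric that figures such as 90-95% refer to.

```python
import math
from typing import List, Sequence, Tuple

# A labeled example: a hypothetical feature vector and a label (1 = NSFW, 0 = safe).
Example = Tuple[Sequence[float], int]


class OnlineLogisticClassifier:
    """Hypothetical linear classifier updated incrementally with SGD.

    Production systems use deep networks on raw images and video; this
    stand-in only illustrates updating an existing model as data arrives.
    """

    def __init__(self, n_features: int, learning_rate: float = 0.5) -> None:
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, x: Sequence[float]) -> float:
        z = self.bias + sum(w * xi for w, xi in zip(self.weights, x))
        return 1.0 / (1.0 + math.exp(-z))

    def predict(self, x: Sequence[float]) -> int:
        return 1 if self.predict_proba(x) >= 0.5 else 0

    def partial_fit(self, batch: List[Example], epochs: int = 100) -> None:
        """Continue training from the current weights instead of starting over."""
        for _ in range(epochs):
            for x, y in batch:
                error = self.predict_proba(x) - y  # gradient of log-loss w.r.t. z
                for i, xi in enumerate(x):
                    self.weights[i] -= self.learning_rate * error * xi
                self.bias -= self.learning_rate * error


def accuracy(model: OnlineLogisticClassifier, data: List[Example]) -> float:
    """Fraction of examples whose predicted label matches the true label."""
    return sum(model.predict(x) == y for x, y in data) / len(data)


# Initial dataset: only feature 0 indicates NSFW content.
dataset: List[Example] = [([1.0, 0.0], 1), ([0.0, 0.0], 0)]
model = OnlineLogisticClassifier(n_features=2)
model.partial_fit(dataset)
missed_before_update = model.predict([0.0, 1.0])  # new content type not yet learned

# The dataset is extended with a newly labeled content type (feature 1).
dataset += [([0.0, 1.0], 1), ([0.0, 0.0], 0)]
model.partial_fit(dataset)
```

Continuing from the existing weights, rather than retraining from zero, is what lets the model pick up the new category cheaply. Keeping the older examples in the dataset, as done here, also helps the model avoid forgetting the categories it learned first.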

Incorporating User Feedback

Incorporating user feedback is critical to adapting AI systems to new kinds of NSFW content. By letting users flag content that has been missed or miscategorized, platforms collect data that can be used to strengthen their AI models. These real-time annotations not only improve moderation accuracy but also create a feedback loop through which the AI learns from real-world use. Such a loop is indispensable for any NSFW system that needs to keep up with emerging trends.
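
The feedback loop can be sketched as a queue that collects user flags and promotes an item into the retraining set only after several distinct users agree on its label. This is a minimal illustration under assumed names (`Flag`, `FeedbackQueue`, `min_reporters`); a real platform would typically add human review before flagged items are used for training.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import DefaultDict, List, Set, Tuple


@dataclass(frozen=True)
class Flag:
    """A user report that an item was missed or miscategorized (hypothetical schema)."""

    content_id: str
    user_id: str
    suggested_label: str  # e.g. "nsfw" or "safe"


class FeedbackQueue:
    """Hypothetical aggregator that turns user flags into retraining examples.

    An item is promoted only once `min_reporters` distinct users agree on the
    same label, which limits the effect of a single mistaken or malicious flag.
    """

    def __init__(self, min_reporters: int = 3) -> None:
        self.min_reporters = min_reporters
        # content_id -> suggested_label -> set of distinct reporting user IDs
        self._votes: DefaultDict[str, DefaultDict[str, Set[str]]] = defaultdict(
            lambda: defaultdict(set)
        )

    def add(self, flag: Flag) -> None:
        self._votes[flag.content_id][flag.suggested_label].add(flag.user_id)

    def training_examples(self) -> List[Tuple[str, str]]:
        """Return sorted (content_id, label) pairs that reached the agreement threshold."""
        return sorted(
            (content_id, label)
            for content_id, labels in self._votes.items()
            for label, users in labels.items()
            if len(users) >= self.min_reporters
        )


queue = FeedbackQueue(min_reporters=2)
queue.add(Flag("img-1", "alice", "nsfw"))
queue.add(Flag("img-1", "alice", "nsfw"))  # a repeat from the same user counts once
queue.add(Flag("img-1", "bob", "nsfw"))
queue.add(Flag("img-2", "carol", "safe"))
promoted = queue.training_examples()  # [('img-1', 'nsfw')]
```

The promoted pairs would then be added to the labeled dataset used in the next training round.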

Cross-Domain Data Transfer

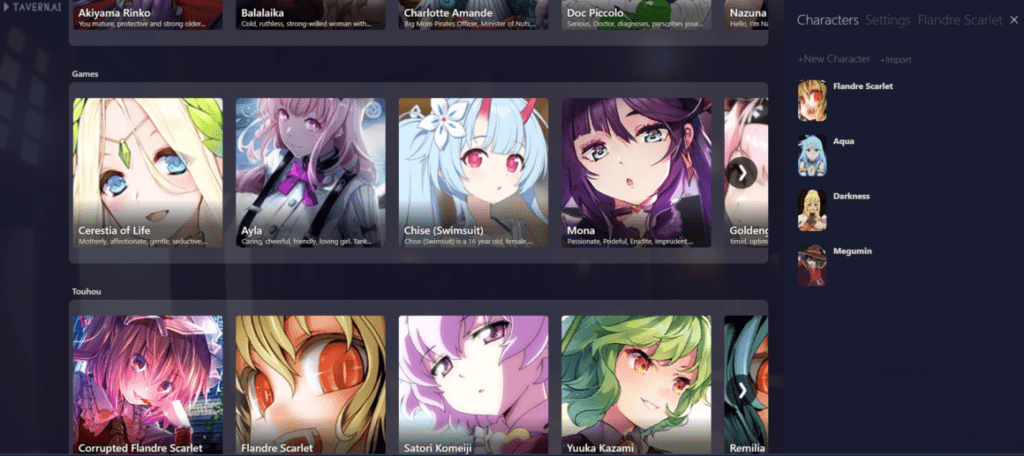

Cross-domain data transfer techniques can similarly help AI evolve to cope with new forms of NSFW content. The idea is to take data and learned knowledge from one domain (such as social media) and apply it to another (such as gaming). This strengthens generalization: the AI learns not only from pornography but also from harder examples in categories such as scene_texture and hard_obj, which broadens its exposure across categories and provides more signal for recognizing anomalous new forms of inappropriate content.
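
One common form of transfer is warm-starting: a model trained on a data-rich source domain supplies the initial weights for a target domain, which is then fine-tuned on its own, smaller dataset. The sketch below assumes a toy linear model and hypothetical feature vectors for a social media source and a gaming target; `LogisticModel` and `transfer` are illustrative names, and a production system would fine-tune a pretrained deep network instead.

```python
import copy
import math
from typing import List, Sequence, Tuple

Example = Tuple[Sequence[float], int]  # (hypothetical features, 1 = NSFW / 0 = safe)


class LogisticModel:
    """Minimal linear classifier trained with SGD (a stand-in for a deep network)."""

    def __init__(self, n_features: int) -> None:
        self.weights = [0.0] * n_features
        self.bias = 0.0

    def predict_proba(self, x: Sequence[float]) -> float:
        z = self.bias + sum(w * xi for w, xi in zip(self.weights, x))
        return 1.0 / (1.0 + math.exp(-z))

    def predict(self, x: Sequence[float]) -> int:
        return int(self.predict_proba(x) >= 0.5)

    def fit(self, data: List[Example], epochs: int, lr: float) -> "LogisticModel":
        for _ in range(epochs):
            for x, y in data:
                error = self.predict_proba(x) - y  # gradient of log-loss w.r.t. z
                self.weights = [w - lr * error * xi for w, xi in zip(self.weights, x)]
                self.bias -= lr * error
        return self


def transfer(source: LogisticModel, target_data: List[Example],
             epochs: int = 20, lr: float = 0.1) -> LogisticModel:
    """Warm-start a target-domain model from source-domain weights, then fine-tune.

    The source model is deep-copied so fine-tuning leaves it unchanged; the
    smaller learning rate keeps the target model close to the source solution.
    """
    target = copy.deepcopy(source)
    return target.fit(target_data, epochs=epochs, lr=lr)


# Hypothetical source domain (e.g. social media) with more labeled examples.
source_data: List[Example] = [([1.0, 0.0], 1), ([0.9, 0.1], 1), ([0.0, 0.0], 0), ([0.1, 0.2], 0)]
source_model = LogisticModel(n_features=2).fit(source_data, epochs=200, lr=0.5)

# Hypothetical target domain (e.g. gaming) with only a handful of examples.
target_data: List[Example] = [([0.95, 0.05], 1), ([0.05, 0.1], 0)]
target_model = transfer(source_model, target_data)
```

The design choice here is that the target model never starts from zero: whatever the source domain taught it is the starting point, and the scarce target data only has to adjust it.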

Joint Measures for Enhanced Training

AI moderation systems can be greatly improved if they are trained on anonymized data that is shared across different platforms. By pooling resources and knowledge, AI developers can build richer models that address the many ways in which NSFW content manifests and spreads across the web.
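
Before records leave a platform, direct identifiers can be dropped and user IDs replaced by keyed hashes. Strictly speaking this is pseudonymization rather than full anonymization: the tokens still let records from the same user be linked, and other fields may still need aggregation or removal. The record schema, the `DIRECT_IDENTIFIERS` set, and `pseudonymize` are hypothetical; `hmac` and `hashlib` are Python standard library modules.

```python
import hashlib
import hmac
from typing import Dict, Iterable, List

# Fields treated as direct identifiers in this hypothetical record schema.
DIRECT_IDENTIFIERS = {"user_id", "email", "ip_address"}


def pseudonymize(records: Iterable[Dict[str, str]], secret_key: bytes) -> List[Dict[str, str]]:
    """Prepare moderation records for sharing with another platform.

    The user ID is replaced by a keyed HMAC-SHA256 token, so records from the
    same user can still be linked, but the token cannot be recomputed from a
    known ID without the platform's secret key. Other direct identifiers are
    dropped. The input records are not modified.
    """
    shared = []
    for record in records:
        token = hmac.new(secret_key, record["user_id"].encode("utf-8"), hashlib.sha256).hexdigest()
        cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
        cleaned["user_token"] = token
        shared.append(cleaned)
    return shared


records = [
    {"user_id": "u123", "email": "a@example.com", "label": "nsfw", "content_hash": "abc"},
    {"user_id": "u123", "email": "a@example.com", "label": "safe", "content_hash": "def"},
]
shared = pseudonymize(records, secret_key=b"platform-secret")
```

Using a keyed HMAC rather than a plain hash matters because user IDs are often guessable; without the key, a partner platform cannot hash candidate IDs and match them against the shared tokens.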

Ethical Issues and Transparency

As AI adapts to increasingly explicit genres of NSFW content, upholding ethical standards and transparency becomes paramount. This includes ensuring that AI systems do not reinforce biases and that they protect user privacy. AI developers must be transparent about how their systems are trained and adapted, which builds trust and accountability.

Future Directions in AI Adaptation

The future of AI in NSFW content moderation will be shaped by more advanced technologies, such as contextual understanding and predictive analytics, that can detect and manage emerging trends before they become mainstream. These advances would bring much-needed improvements to AI capabilities, which are necessary to keep pace with the ever-evolving nature of digital content.

The ability of AI to adapt to new forms of NSFW content is essential to sustaining an online environment that is both secure and respectful of all users. As AI technology advances, it will be better able to handle these challenges, ensuring it remains anything but an outdated tool for digital content moderation.

For a more detailed analysis of how this works with NSFW content, see nsfw character ai.